After much anticipation, we have finally got to the topic that I

keep on mentioning, but never explain – interrupts! Interrupts are

probably the single most important concept that make every electronic

device work as it does. If interrupts didn’t exist, your electronic

devices wouldn’t be as responsive, fast and efficient as they are. They

are the source for all types of timers and ticks and integrated into

most peripherals. Every CPU has interrupt capabilities, although the

capabilities vary widely. Interrupts are sometimes neglected because its

‘easier’ to poll than implement interrupts but its generally considered

poor design. So what are interrupts? They are really just a way for the

hardware to signal the software that some event has occurred. Each

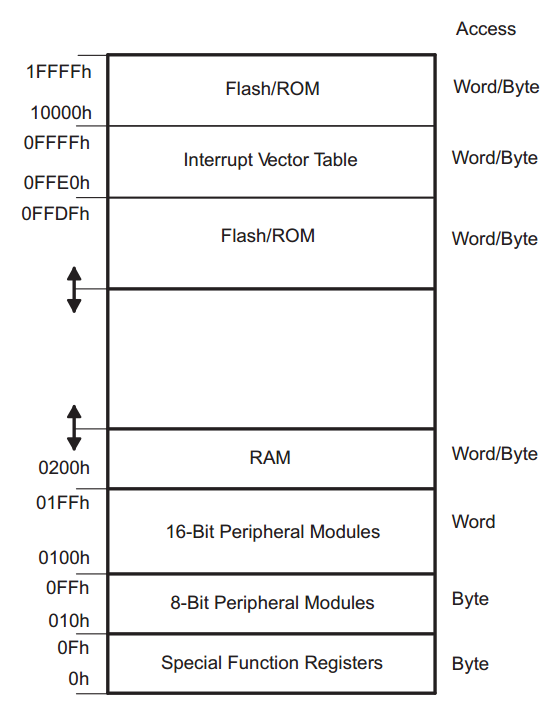

event has an entry in what is called the interrupt vector table – a

table of pointers to functions stored in memory (either flash or RAM),

which the CPU will jump to automatically when the interrupt fires. These

functions are called interrupt service routines (ISR), and though some

may be included as part of the compiler libraries, most need to be

implemented by the programmer. Interrupt service routines must be as

quick and efficient as possible, so as to not stall the software which

was executing. They must also be efficient with the stack. In operating

system environments, interrupt handlers may operate on a separate

[software] stack which is much smaller than a typical process or thread

stack. Also, no blocking calls should be made. Taking too long in the

ISR can result in lost data or events. Interrupts have attributes that

may or may be programmable, depending on the architecture and the

device. In this lesson, we will learn about the different types of

interrupts, their attributes, how to implement them, what happens when

an interrupt fires, and how they are used in real world applications.

The generic interrupt vector table is available in table 2-1 of the

family reference manual, while device specific vectors are defined in

table 5 of the datasheet.

Most interrupts can be enabled or disabled by software. Typically

there is a register that will perform the function globally for all

interrupts or groups of interrupts, and then additional registers for

individual interrupts. Disabling an interrupt is often referred to as

‘masking’ the interrupt. Interrupts are almost always accompanied by a

status or flag register which the software can read to determine if a

specific interrupt has fired. This is required because sometimes many

physical interrupts are connected to the same interrupt request (IRQ) –

the signal to the CPU. The flag is required because the interrupt line

may only be active for a very short amount of time and the software may

not respond fast enough. The flag ensures the the state of the interrupt

is stored somewhere until it is read and cleared by the software. It is

also often used to acknowledge and clear the interrupt, as is the case

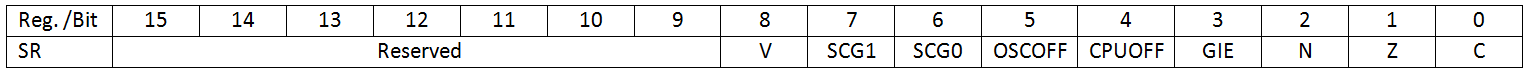

with the MSP430. Enabling and disabling interrupts in the MSP430 at a

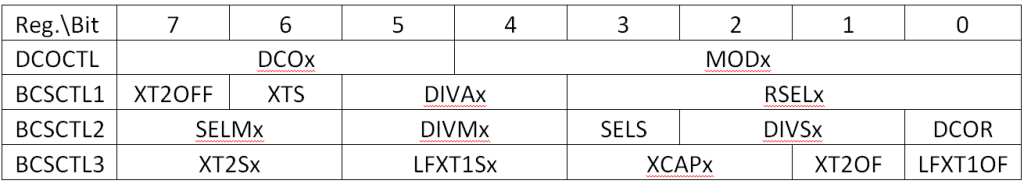

global level is done through the status register. We have not looked in

detail at the contents of the status register, so lets do that now.

As mentioned above, GIE is the field used to enable or disable

maskable interrupts on the device. The compiler provides intrinsic

functions to do this in C, __enable_interrupts and __disable_interrupts.

Maskable interrupts are disabled by default on reset, so the software

must enable them to use them. Not all interrupts can be disabled. These

are called non-maskable interrupts, or NMI. There are usually some

interrupts on a device that are reserved as NMIs. NMIs are typically

critical errors to which the software must respond and handle in order

to continue execution. The MSP430 has three NMIs.

Sometimes these types of interrupts are referred to as exceptions.

Exceptions can also be raised due to some non-recoverable fault. It is a

similar concept to a software exception, but implemented in hardware.

On the MSP430, there is one non-recoverable exception, the illegal

instruction fetch, which causes a reset. On some architectures, this

exception can be handled and the handler may increment the program

counter to the next address to try and skip over it. Other examples of

exceptions which exist on other architectures are the divide by zero and

invalid memory access. Although not documented as an exception, the

MSP430 does source the reset vector if attempting to execute from an

invalid memory space such as an address not in RAM or flash.

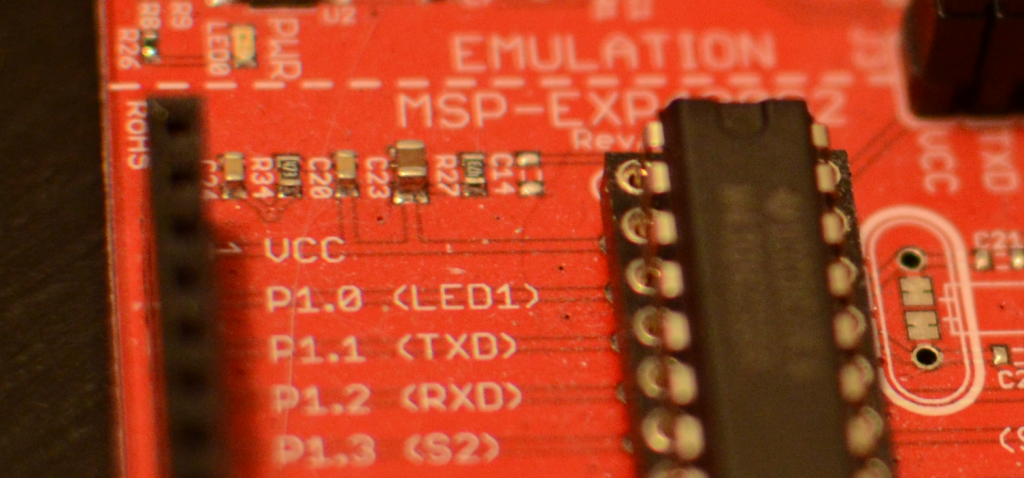

One very common use for interrupts is to detect changes in GPIO

inputs. Stemming from our push button code which has to poll the P1IN

register, enabling an interrupt on a GPIO would allow the hardware to

signal the software when the input has changed values. Not all GPIOs are

interrupt capable, so remember to check in the device datasheet when

choosing which pin to connect it to. GPIO interrupts have their own

subset of properties. The first property is the active signal level –

either active high or active low. Active high means that the signal is

interpreted as active when the line is high (a positive voltage), while

active low means the signal is interpreted as active when the line is

low (0V or ground). However, the second property, edge vs level based

(also known as edge or level triggered interrupts), defines when the

interrupt actually fires. Edge based interrupts will only fire once as

the state of the line transitions from the inactive level to the active

level. Level based interrupts will fire continuously while the input is

at the active level. In order to handle a level based interrupt, the ISR

should mask it so that it does not fire continuously and effectively

stall the CPU. Once the source of the interrupt is cleared, the

interrupt may be enabled so it can fire again. Level based interrupts

are sometimes used to perform handshaking between the source of the

interrupt and the software handling it. The software would enter the

ISR, check the status of the source of the interrupt to determine

exactly what caused the interrupt (remember multiple sources per IRQ),

and then clear source condition and the flag. If the interrupt condition

is successfully cleared, the line will return to the inactive state and

the software will continue on. Otherwise, the line will remain active

and the interrupt would fire again. The MSP430 only supports edge

based interrupts.

Another important attribute of interrupts is the priority. Interrupt

priorities determine which interrupt service routine will be called

first if two interrupts fire at the same time. Depending on the

interrupt controller, some interrupt priorities may be configurable by

software. The MSP430 does not support this, all interrupts have a fixed

priority. The interrupt priorities on the MSP430 are in descending order

from highest address in the vector table to the lowest. One important

concept related to interrupt priorities is interrupt nesting. If one

interrupt fires and the ISR is invoked, and while in the ISR another

interrupt of a high priority fires, the executing ISR may be interrupted

by the higher priority one. Once the higher priority ISR has completed,

the lower priority ISR is then allowed to complete. In the case of the

MSP430, nesting is not dependant on the priority. Any priority interrupt

will be serviced immediately if nested interrupts are enabled. Nesting

interrupts is an advanced topic an will not be enabled for this

tutorial. If an interrupt fires while an ISR is executing, it will be

serviced only once the ISR is complete.

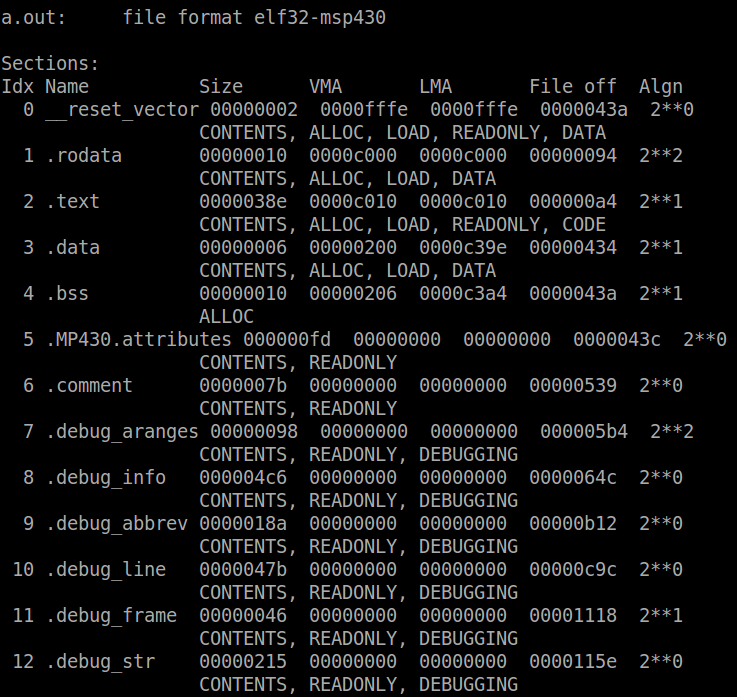

The reset vector is the single most important interrupt available.

Without it, code would never begin executing. The compiler [typically]

automatically populates the reset vector with the address of the entry

point of the .text section, i.e. the __start function. When a device is

powered on or the reset pin is toggled, the reset vector is sourced and

the CPU jumps to the address it contains. Going back to the linker

script from last lesson, the table of memory regions has a region

defined for each interrupt vector. A region called RESETVEC is located

at 0xFFFE, defined with a size of 2 bytes. Then a section called

__reset_vector is created and assigned to this memory location. The data

allocated to this section will be the address of the reset vector ISR.

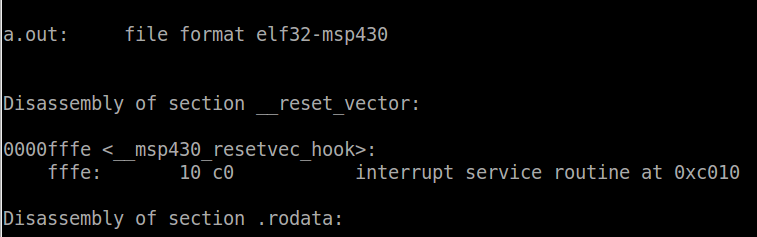

To show that the reset vector is assigned the start-up code, the

following command can be used:

Passing the argument -D to objdump is similar to -S, but it dumps the

disassembly for all sections rather than just the .text section as -S

does. This is required because as mentioned above, the reset vector is

part of its own section, not the .text. The output of this command will

look like this:

The first couple lines of code are all we are interested in. We can

see that the first section in the output is the reset vector. In it

there is only one label: ‘__msp430_resetvec_hook’. At this label there

is 2 bytes of data allocated with the value of 0xc010 (keep in mind the

endianess). Search for 0xc010, and you will see that this is the address

of __start, the entry point. Therefore as expected, when sourced the

reset vector shall cause the CPU to jump to the start-up code.

The watchdog is another very important interrupt. It is actually a

whole module which is implemented as part of almost every MCU, SoC, etc…

The purpose of the watchdog is to ensure that the software is running

and had not stopped, crashed or otherwise been suspended. It is very

rare that you find an embedded system without a watchdog. If implemented

correctly in both hardware and software, the device should never stall

indefinitely. Once enabling the watchdog, the software must pet it

periodically with an interval less than its timeout. Failure to do so

will result in the watchdog resetting the device. In the case of the

MSP430, the watchdog interrupt is the same as the reset vector, so a

watchdog interrupt will reset the device. Some devices have more

complex watchdogs which generate an intermediate interrupt to allow the

software to perform any logging or cleanup operations before resetting.

The MSP430 watchdog module does not support this, but it has an

additional interesting feature – it can be configured as a regular

16-bit timer. This could be useful if an additional timer if needed. We

will be configuring the watchdog in watchdog mode because it is

important to understand how to setup the module and how it should be

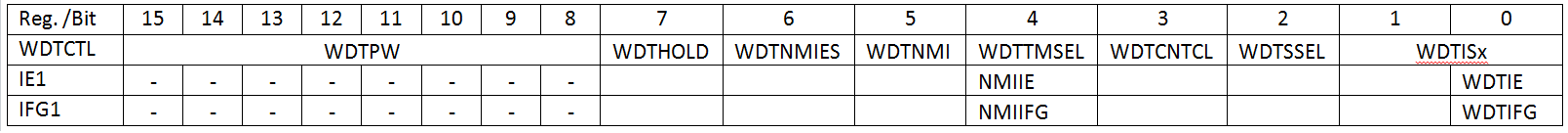

maintained by the software. The watchdog is configured and monitored

through the 16-bit register WDTCTL and two additional registers,

interrupt enable 1 (IE1) and interrupt flag 1 (IFG1).

The blank fields of IE1 and IFG1 registers are determined by the

specific device and will be covered as needed. Now lets use what we have

learned to disable and enable the watchdog timer in our existing code

and watch the device reset. Below is a set of a set of functions to

perform these actions.

The first function disables the watchdog exactly as we do currently

in our main function. The _disable_watchdog function should be called

right at the beginning of your code in order to avoid accidentally

generating a reset when modifying the configuration. You can go ahead

and replace the existing watchdog code in main with a call to this

function. In the enable function, the watchdog timer interrupt flag is

read and cleared if required. If the flag is set, some action could be

performed such as logging the number of watchdog resets but we have no

need at this time. Then _watchdog_pet is called. Petting the watchdog

will effectively enable it as well. Only two fields are set in the

WDTCTL register, the password field WTDPW and the clear counter bit

WDTCNTCL. The watchdog will be enabled since the WDTHOLD bit is cleared.

The timeout of the watchdog timer is determined by the clock source

select bit WDTSSEL and the interval select field WDTISx. This means the

watchdog timer will source its clock from ACLK and expire after 32768

clock cycles. In

we

did not configure ACLK but now we must. ACLK should be configured to be

sourced from VLOCLK, which is approximately 12kHz. If we tried to

source it from MCLK or SMCLK, the timeout would be too short for the

delay required by the blinking LED. To configure ACLK, in main we must

add a new line under the clock configuration.

Since the interval selector is set to 32768 clock cycles at 12kHz,

the timeout of the watchdog will be 2.73s. Therefore the watchdog must

be pet at least every 2.73s. Since our loop delay 500ms each iteration,

we are within the requirements of the watchdog. Now lets see that the

watchdog actually fires and resets the device. Call the watchdog enable

function right after detecting the button press.

Typically you would want to enable the watchdog as soon as possible,

especially before any infinite loops. In case this I want you to control

when the watchdog is enabled so that you can see when it resets.

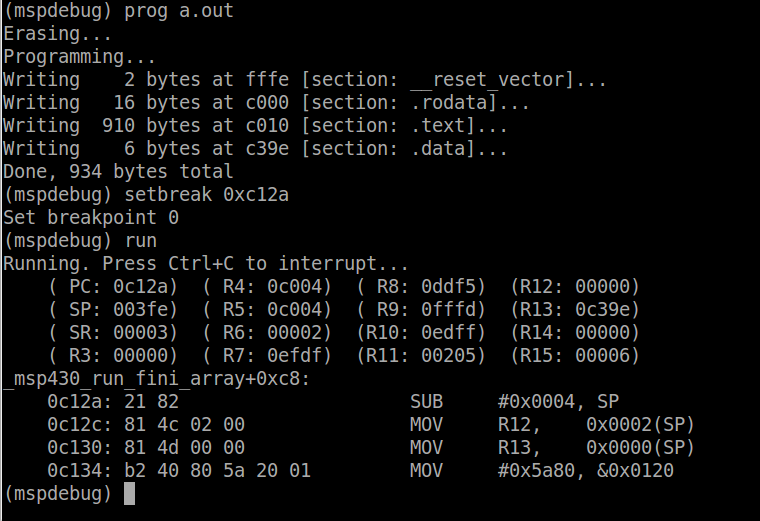

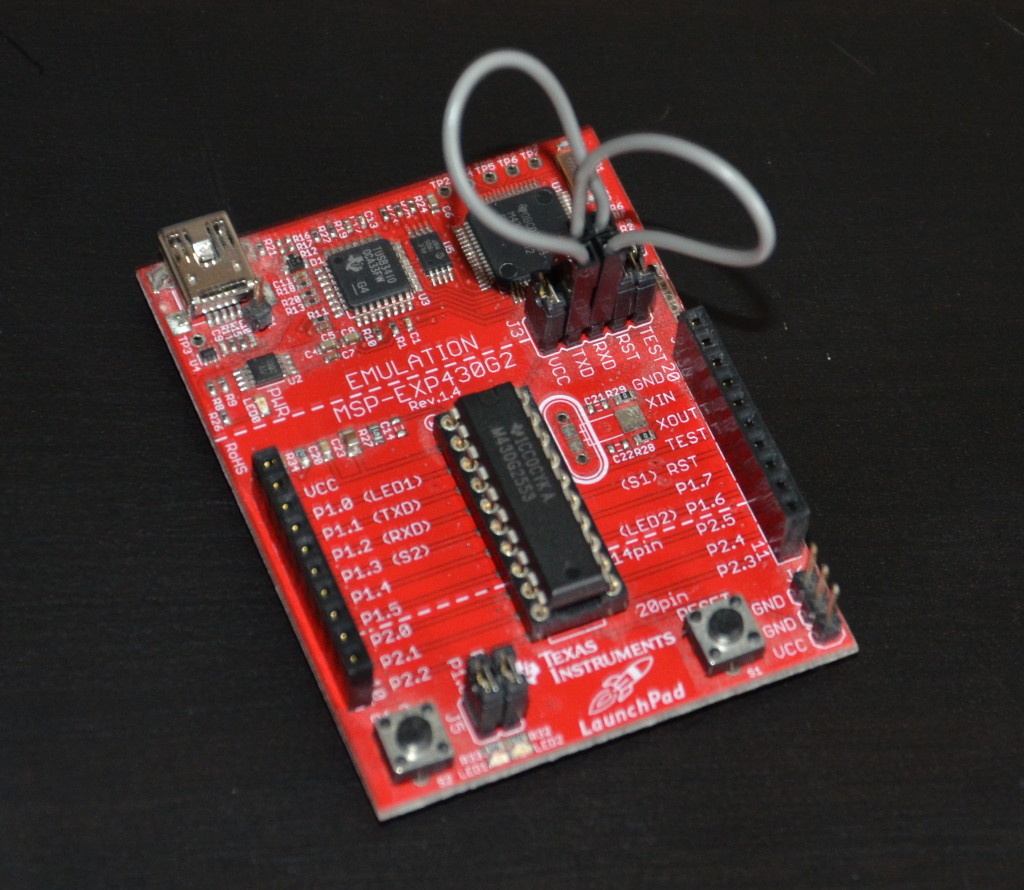

Compile the code and program it to the device. Set a breakpoint at the

new function _watchdog_enable (use nm to find the address). Run the code

and press the button. When it stops at the breakpoint run it and press

the button again. The breakpoint will be hit again. This shows that the

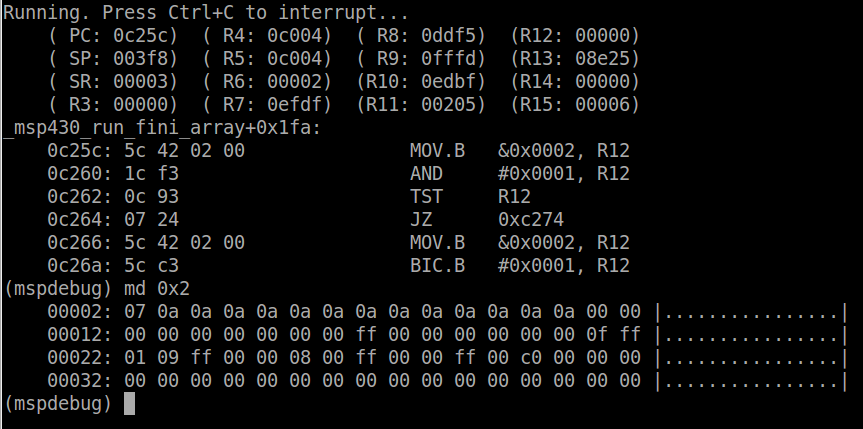

device is restarting. To verify that it is because of the watchdog, we

can read the register IFG1 and see if WDTIFG is set. IFG1 is located at

address 0x02 as indicated in both the datasheet and the family reference

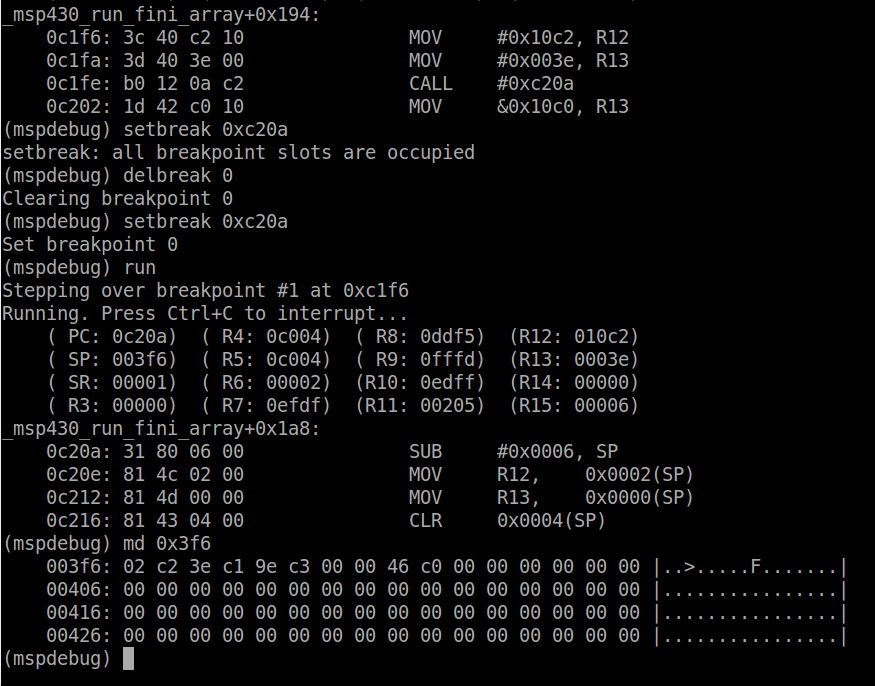

manual. Read this address using the md command. You should see the

following:

The value of the IE1 is 0x7, therefore the WDTIFG bit is set. It is

important to note that the other bits which are set are defined by the

device specific implementation which can be found in the datasheet. They

are PORIFG (bit 2), the power on reset interrupt flag, and OFIFG (bit

1), the oscillator fault interrupt flag. These two bits are set because

that is their default value after reset. Now we will pet the watchdog to

prevent it from resetting the device. Call _watchdog_pet inside the

while loop that toggles the LED.

Compile the code and program the board. Press the button to enable

the watchdog and notice that now the board does not reset (note the

address of the breakpoint will need to be adjusted since you added new

code). And that’s basically how to pet a watchdog. As we add more code

to our project, we will see how the watchdog needs to be managed to

ensure that it only trips if there is a failure and not because the code

is waiting for some input.

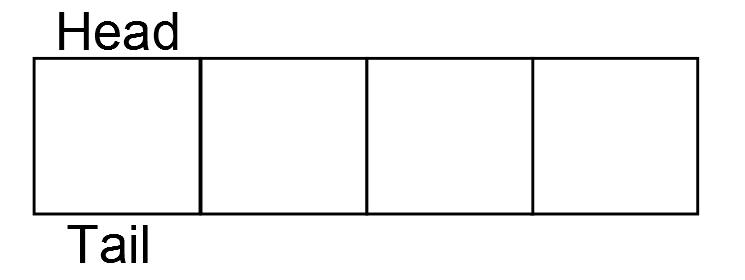

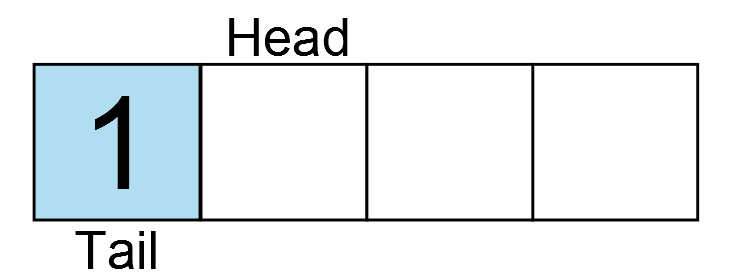

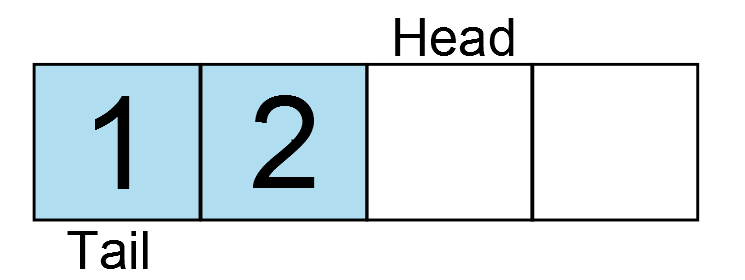

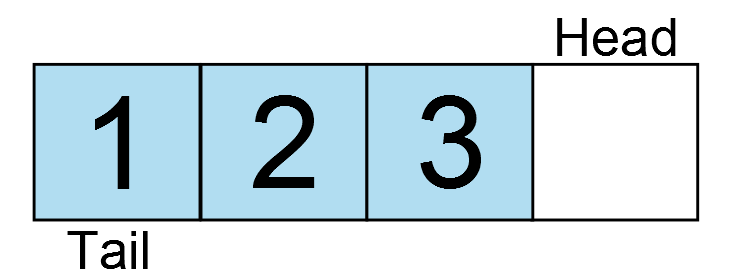

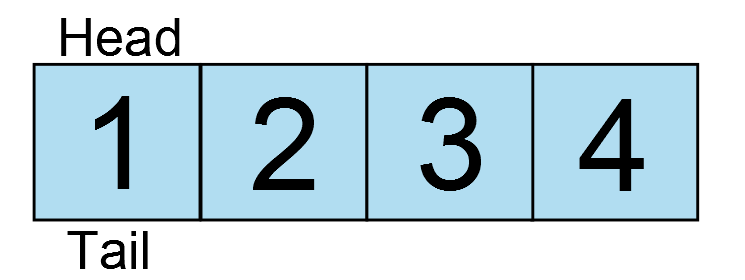

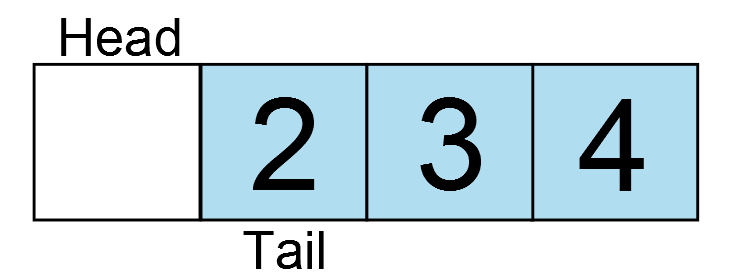

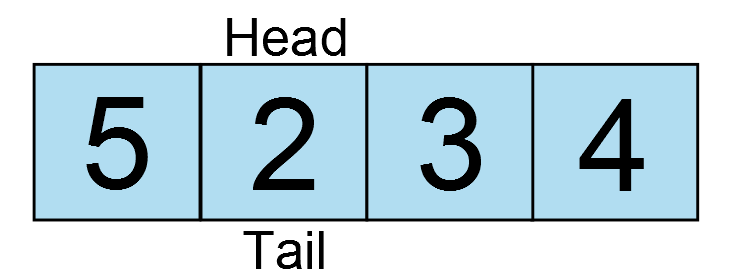

Now we will get into the details of interrupt handling on the MSP430.

When an interrupt fires, a few things have to happen before entering

the ISR:

Now the CPU can begin executing the ISR. All of this happens in

hardware, no software is involved so you will never see this in code. It

can be inspected by using the debugger however, and we will see this

shortly. The time starting from when the interrupt is triggered to the

time when the ISR is invoked, is called the interrupt latency. In the

case of the MSP430, the interrupt latency is 6 clock cycles.

The ISR has some responsibilities of its own before executing the

application code. If written in C, this is taken care of by the

compiler. In assembly it must be implemented manually. To see how this

works, we are going to create an interrupt service routine in C to

detect the button press. Unfortunately, there is no way defined by the C

programming language on how to declare an interrupt handler, it is left

up to the compiler. Therefore what you will learn is only applicable

for gcc and may look slightly different on other compilers. To declare

an ISR in gcc you must use the ‘attributes’ feature. Attributes provide

additional functionality which are non-standard. When possible, using

attributes should be avoided so that the code is compiler agnostic (read

– portable from one compiler to another). There are some attributes

which are common across compilers and it is often considered good

practice to define them as macros in a separate header file (often name

compiler.h) which uses hash defines to compile the macros relevant for

that compiler. To declare the interrupt service routine, we first need

to figure out which interrupt we are going to write an ISR for. On the

MSP430, each IO port is assigned an interrupt. It is up to the software

to determine which pin on the port was the source. So how so do we refer

to this interrupt? Open the msp430g2553.h header file and search for

‘PORT1_VECTOR’. You will find the list of interrupt vectors for this

device. The desired vector should be passed to the interrupt attribute.

It tells the compiler in which section to place the address of your ISR.

Therefore, our empty function declaration would look like this:

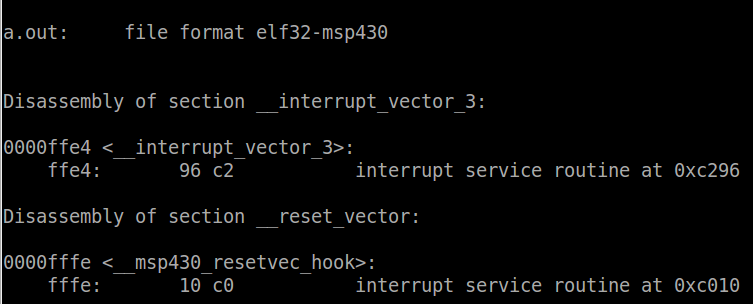

ISRs must have a return type of void and have no arguments. Lets see

what has been generated using the the objdump command from earlier to

disassemble the code.

We can see that a new section has been added called

__interrupt_vector_3. To understand this we need to go to the datasheet

and find the address of the interrupt vector for port 1 (since our

button is connected to P1.3). The vector is located at address 0xFFE4.

In the linker script memory region table, this address is part of the

region VECT3. In the sections table, we can see that the section

__interrupt_vector_3 is loaded into region VECT3. This means that when

passing vector 3 (which PORT1_VECTOR is defined as) to the interrupt

attribute, the compiler will place the address of that function at

0xFFE4. From the objdump output, we can see the value at 0xFFE4 is

0xC278. Search in the rest of the file for this address and you will

find that it is in fact our function port1_isr. Currently the function

is empty, so lets go back and fill it in. In order to start or stop the

the blinking LED using the push button, we will need some sort of signal

between our main function and the ISR. We will use a simple variable

which will be toggled by the ISR.

For some of you volatile may be a new keyword that you have not used

before. Volatile tells the compiler that the value of that variable may

be changed at any time, without any nearby code changing it. This is the

case for an ISR. The compiler cannot know when the ISR is going to

fire, so every time the variable is accessed, it absolutely must read

the value from memory. This is required because compilers are smart, and

if they determine that nothing nearby can change the value, it may

optimize the code in such a way that the value will not be read. In this

case, that change will occur in the ISR so it must be read every single

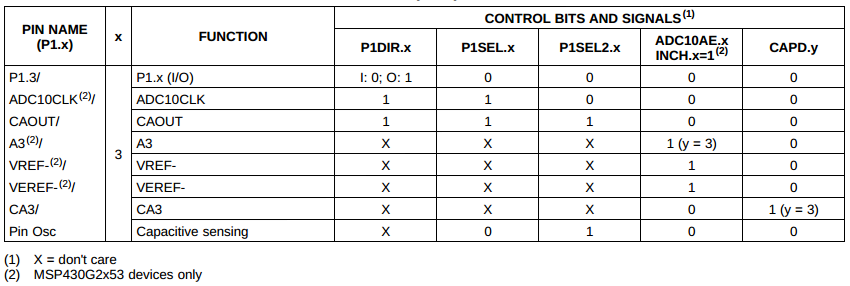

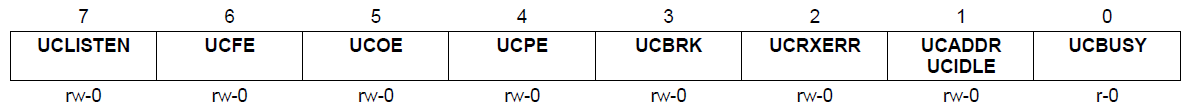

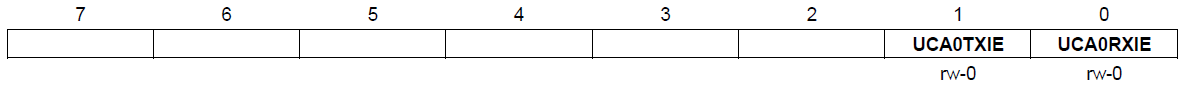

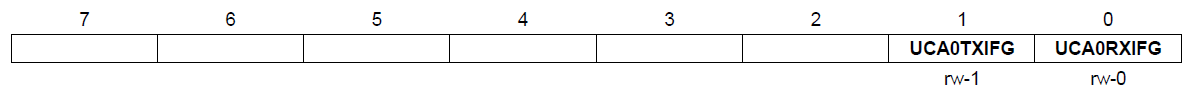

time. Next we have to introduce a few new registers, PxIES, PxIE and

PxIFG (where ‘x’ is the port number). All three of these register follow

the same bit-to-pin convention as the other port configuration

registers we have previously discussed. The latter two are similar to

IE1 and IE2, they are the port interrupt enable and port interrupt flag

registers. PxIES is the interrupt edge select registers where a bit set

to 0 signals an interrupt for a low-to-high transition (active-high) and

a bit set to 1 signals an interrupt on a high-to-low transition

(active-low). Now that we have covered how to configure the interrupts,

let’s modify our code to use it. First, instead of waiting in a while

loop to start the LED blinking, let the interrupt handler enable it, so

remove the first while loop. The interrupt should be configured before

the watchdog is enabled. Since the button is on P1.3 and it is

pulled-up, we want to set the interrupt to occur on a high-to-low

transition so bit 3 in PIES and P1IE should be set high. Finally, enable

interrupts using the intrinsic function __enable_interrupt. In the

while loop, modify the code to only blink the LED when _blink_enable is

non-zero. Your code should look something like this:

Now for the ISR. Since one interrupt is sourced for all the pins on

the port, the ISR should check that P1.3 was really the source of the

interrupt. To do so, we must read bit 3 of P1IFG and it is high, toggle

_blink_enable and then return.

All of these changes are available in the latest lesson_6 tag on

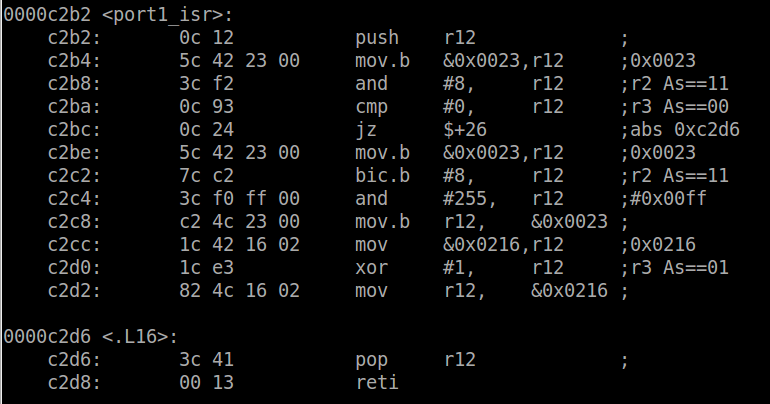

github. Recompile the code and use objdump to inspect the ISR. The

address of the ISR can be found by looking at the contents of the

__interrupt_vector_3 section from objdump as we did for the reset

vector.

The first operation is to push R12 onto the stack. R12 is the

register the compiler is going to use to toggle the variable

_blink_enable. It must ensure that the previous value is stored because

it may be used by the function which has been interrupted. If the ISR

clobbers the register value, when the interrupted function continues

execution, the register would have the wrong value. This applies to all

registers and it is up to the compiler to determine which registers are

used by the ISR and thus must be pushed on the stack. In this case only

R12 is being used, so the most efficient implementation is to push only

R12. Now that R12 is free to use, it can be used to check the value of

P1IFG and ensure the flag is set. Then in order to acknowledge the

interrupt so it doesn’t fire again, the flag must be cleared. The

address of P1IFG (0x23) is loaded into R12 and bit 3 is cleared. Finally

the address of our variable _blink_enable is loaded into the register

and the value is XOR’d with 0x1. The stack is popped back into R12 to

restore the initial value before returning. To return from an interrupt,

the RETI pseudo-instruction is used. RETI, which stands from return

from interrupt, tells the CPU to pop off the SR and PC values back into

their respective registers. Now that all the registers are exactly as

they were before the interrupt fired, the flow of execution continues on

as if nothing happened. Now program the device and run the new code.

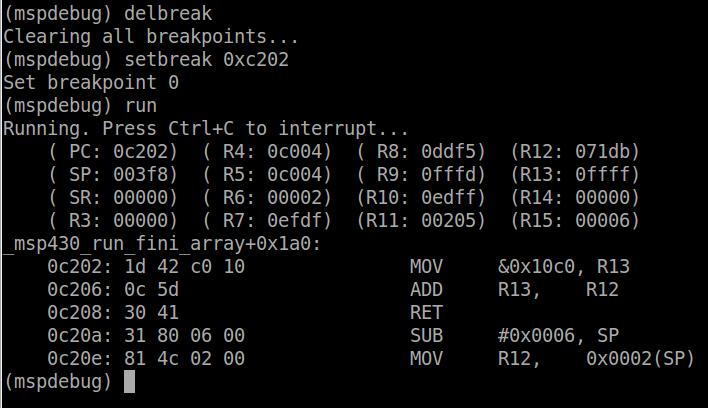

The LED will begin blinking once the button is pressed, and pressing the

button again will toggle the blinking on or off. Set a breakpoint at

port1_isr. When the button is pressed the CPU will be stopped at

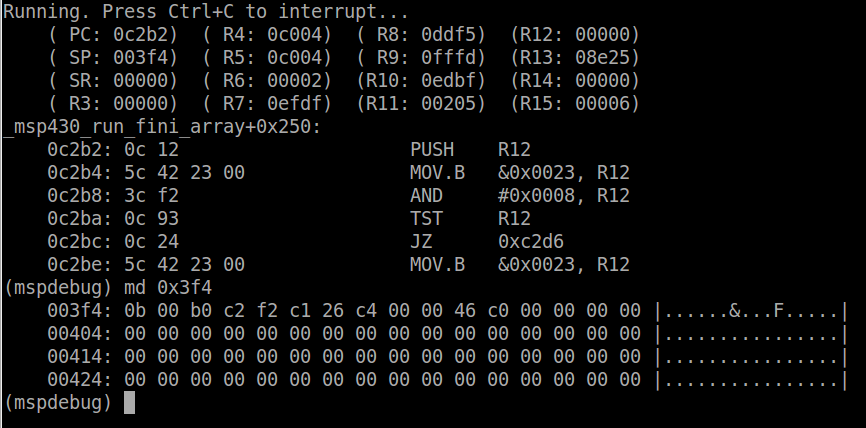

the ISR. The stack pointer is set to ~0x3f4 (depending where exactly the

code was interrupted) and dumping the memory at this location, we can

see the result of entering the ISR.

The first 2 bytes on the stack will be the SR. The value of the

status register stored in the stack will be different from that

currently dumped by mspdebug since it is cleared by the hardware. The

next 2 bytes will be the PC, in this case 0xc2b0. Using objdump to view

the code at this address, we can see that the CPU was interrupted right

at the end of _watchdog_pet, which is called within the while loop, as

expected. Your values may differ slightly depending where exactly the PC

was when you pressed the button. What we really have experienced here

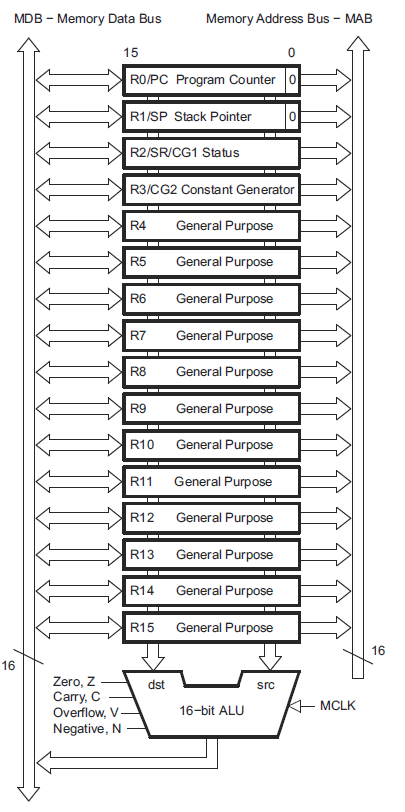

is something commonly known as a context switch. It is the foundation of

all software, not just embedded systems. Almost every operating system

uses context switching to some extent, usually triggered by an interval

timer firing an interrupt periodically. This is what allows you have to

many threads and processes running on just one CPU. Its the illusion of

parallel operation through the use of extremely fast context changes

many times per second. The definition of the context is dependent on the

architecture. In the case of the MSP430, it is all the main CPU

registers we have discussed in the last lesson. When the ISR fires, we

have to save all of these registers that could be modified so when the

interrupt completes, the context can be restored. A context switch of a

thread or task (synonymous terms) would have to save all the registers

on its stack before restoring the registers for the task about to run.

There is some really interesting stuff here, and this is only an

introduction. We will not be creating a multi-threaded system since that

is way beyond the scope of this course, but interrupts are a form of

context switch so it is important to understand how powerful and

important they really are in embedded systems.

In this lesson we are going to go on a bit of a tangent and take

care of some housekeeping duties to accommodate our growing code base.

Until now, we have been writing all the code in a single file, and

compiling by invoking gcc on the command line. Clearly neither of the

are scalable nor feasible solutions for for a full embedded development

project. We need to separate our code into logical modules, create

header files will APIs to interface with them and introduce a new tool

that will help us maintain our build system and environment. This tool

is called ‘make’. Make is GNU utility which is the de-facto standard in

the open source community for managing build environments. Most IDEs

(notably Eclipse and its derivatives) use make or a form a make to

manage the build. To the user its looks like a series of files in a

folder which get compiled when you press build, but under the hood a

series of scripts are being invoked by make which does all the real

work. Make does several things which we will look at in this tutorial.

First, it allows you to defined compiles rules, so instead of invoking

gcc from the command line manually, you can script it so it passes in

the files and the compiler options automatically. It allows you to

better organize your code into directories, for example, one directory

for source files, one for header files and another one for the build

output. And finally, it can be used to track dependencies between files –

in other words, not all files need to be recompiled every time and this

tool will determine which files need to be compiled with the help of

some rules we will add to the script. Make is an extremely powerful

tool, so we will just scratch the surface in this tutorial to get us

started. But before we jump into make, we must start by cleaning up our

code.

Reorganizing the code

As you know, all the code we have written is currently in one file,

main.c. For such a small project, this is possibly acceptable. However

for any real project functions should be divided into modules with well

defined APIs in header files. Also, we do not want to have a flat

directory structure so we must organize the code into directories. The

directory structure we will start with is going to be simple yet

expandable. We will create three directories:

- src: where all the source files go

- include: where all the header files go

- build: where all the output files go

Create the new directories now.

cd ~/msp430_launchpad

mkdir src

mkdir include

The build directory will actually be generated by the build

automatically, so we don’t have to create it manually. You rarely check

in the built objects or binary files into the SCM (git) so the build

directory should be added to the .gitignore file. Open the .gitignore

file (a period in front of a filename means it is hidden in Linux – you

can see it with the command ls -al) and on the next line after ‘*.out’

add ‘build/’, save and close. You will not see the new directories under

git status until there is a file in them as git ignores empty

directories. Move main.c into the src/ directory.

mv main.c src

Open main.c in your editor and lets take a look at how we can

separate this file into modules. The main function is like your

application, think of it as your project specific code. Start by asking,

what does this file need to do? What tasks does it perform? Let break

this down, the main program needs:

- enable / disable / pet the watchdog

- verify the calibration data

- set up the clocks

- initialize the pins

- perform the infinite loop which is the body of the application

To enable / disable / pet the watchdog, does the main program simply

need to invoke the functions that we wrote, or does it make sense that

it has knowledge of the watchdog implementation. Does it need to know

anything about the watchdog control register and what their

functionality is? No, not at all, it simply needs to be able to invoke

those functions. From the perspective of the application the watchdog

functions could be stubs. That would be a pretty useless watchdog, but

it would satisfy the requirements of main. The implementation of the

watchdog is irrelevant.

Verifying the calibration data is another example of code that the

main application is not required to know about. In fact, it is safe to

say that the only piece of code which relies on this check is setting up

the clock module. Speaking of which, does the application need to know

how the clocks are set up? Not really. Maybe it will need to know the

speed of the clocks in order to configure some peripherals, but not the

actual implementation of the DCO configuration. Those are board

specific, not application specific.

Finally the pin configuration. The application does rely on the pins

being configured correctly in order to read and write to them, but the

pin muxing needs to be done only once and again, depends on the board.

The application could choose to use them or not. Therefore the pin

muxing could be considered part of the board initialization. Hopefully

you see where we are going with this. We are trying to categorize

certain functionalities so that we can create reusable modules. It isn’t

always so straight cut and clear, and often takes experience and many

iterations to figure out what works, but when done properly, the code

will be much more maintainable and portable. In our case we have defined

the following modules:

- board initialization

- clock module initialization

- pin muxing / configuration

- watchdog

- TLV configuration data

- application

We could abstract this even further by creating separate modules for

clock configuration and pin muxing but there is no need at this point.

Its good practice to modularize your code, but only to a certain extent.

Abstract it too much without justification, and you made more work for

yourself and more complicated code for no good reason. Try to find a

middle ground that satisfies both your time and effort constraints but

still produces nice clean code (we will look at what that means

throughout the tutorials). Remember, you can always refactor later, so

it doesn’t have to be 100% the best code ever the first time around.

So lets take a look at what new header files and APIs we will have to

introduce to modularize our code as described above. Based on the code

we have written already, we can separate the APIs defined into new

source and header files. The first API to look at is the watchdog. There

are three watchdog functions in our code at this point. Since they will

no longer be static, we can remove their static declarations from

main.c and move them into a new file called watchdog.h which will be

located in the include directory. We will also remove the leading

underscore to indicate that they are public functions. As a note for

good coding practice, it is easiest for someone reading your code when

the prefix of your functions match the filename of the header containing

them, for example, watchdog_enable would be in watchdog.h. Yes the IDEs

can find the function for you and you don’t have to search for

anything, but there is no reason to mismatch naming conventions. So now,

our watchdog.h file will look like this:

#ifndef __WATCHDOG_H__

#define __WATCHDOG_H__

/**

* brief Disable the watchdog timer module

*/

void watchdog_disable(void);

/**

* brief Enable the watchdog timer module

* The watchdog timeout is set to an interval of 32768 cycles

*/

void watchdog_enable(void);

/**

* brief Pet the watchdog

*/

void watchdog_pet(void);

#endif /* __WATCHDOG_H__ */

Notice how when we create public functions that are defined in header

files we will always document them. This is considered good practice

and should be done consistently. These are extremely simple functions so

not much documentation is required. Obviously a more complex function

with parameters and a return code will have more information, but try to

keep it as simple as possible for the reader without revealing too much

about the internal workings of the function. This also leads to the

concept of not changing your APIs. Changing the API should be avoided,

as well as changing any behaviour with the external world. The expected

behaviour should be well defined, although the implementation can be

changed as required. Therefore your comments will have minimal changes

as well.

Now, we need to cut the function definitions out from main.c and move

them to a new file called watchdog.c under the src/ directory. Remember

to change the functions to match those in the header file. We will also

need need to include watchdog.h as well as msp430.h to access the

register definitions.

#include "watchdog.h"

#include <msp430.h>;

/**

* brief Disable the watchdog timer module

*/

void watchdog_disable(void)

{

/* Hold the watchdog */

WDTCTL = WDTPW + WDTHOLD;

}

/**

* brief Enable the watchdog timer module

* The watchdog timeout is set to an interval of 32768 cycles

*/

void watchdog_enable(void)

{

/* Read the watchdog interrupt flag */

if (IFG1 & WDTIFG) {

/* Clear if set */

IFG1 &= ~WDTIFG;

}

watchdog_pet();

}

/**

* brief Pet the watchdog

*/

void watchdog_pet(void)

{

/**

* Enable the watchdog with following settings

* - sourced by ACLK

* - interval = 32786 / 12000 = 2.73s

*/

WDTCTL = WDTPW + (WDTSSEL | WDTCNTCL);

}

Another very important concept is knowing when and where to include

header files. Not knowing this can result in extremely poorly written

and impossible to maintain code. The rules for this are very simple:

- A public header file should include all header files required to use

it. This means, if you have defined a structure in header file foo.h

and it is passed as an argument for one of the functions bar.h, bar.h

must include foo.h. You don’t want the caller of this API to have to

know what other include files to include. The reason for this being, if

the caller must include two header files to use one API, the order

matters. In this case, foo.h must be included before bar.h. If it just

so happens the caller has already included foo.h for some other reason,

they may not even notice it is required. This is a maintenance nightmare

for anyone using your code.

- A public header file should include only what is required. Those

giant monolithic header files are impossible to maintain. Users of your

APIs shouldn’t have to care about your implementation. Don’t include

files or types that make this information public because when you change

it, the calling code will have to be updated as well. Include header

files and private types required in the implementation only in the

source file.This makes the code portable and modular. Updating and

improving your implementation is great, forcing to callers to update

their code because of changing in some structure, not so much.

- The last point is no never include header files recursively, meaning

foo.h includes bar.h and vice versa. This will again result in a

maintenance nightmare.

Not too complicated right? The goal is to make these rules second

nature, so keep practising them every single time you write a header

file. And if you catch me not following my own rules, please feel free

to send me a nasty email telling me about it

Back to the code, we also want to separate the configuration data

(TLV) verification. Again, a new header file should be created in the

include/ directory called tlv.h. We remove the declaration of the

_verify_cal_data function from main.c, move it to tlv.h, and rename it

to tlv_verify.

#ifndef __TLV_H__

#define __TLV_H__

/**

* brief Verify the TLV data in flash

* return 0 if TLV data is valid, -1 otherwise

*/

int tlv_verify(void);

#endif /* __TLV_H__ */

Now create the matching source file tlv.c in the src/ directory and

move the implementation from main.c into this file. We will also need to

move the helper function _calculate_checsum into our new file. It will

remain static as it is private to this file.

#include "tlv.h"

#include <msp430.h>

#include <stdint.h>

#include <stddef.h>

static uint16_t _calculate_checksum(uint16_t *address, size_t len);

/**

* brief Verify the TLV data in flash

* return 0 if TLV data is valid, -1 otherwise

*/

int tlv_verify(void)

{

return (TLV_CHECKSUM + _calculate_checksum((uint16_t *) 0x10c2, 62));

}

static uint16_t _calculate_checksum(uint16_t *data, size_t len)

{

uint16_t crc = 0;

len = len / 2;

while (len-- > 0) {

crc ^= *(data++);

}

return crc;

}

The last header file will be board.h, which will require a new API to

initialize and configure the device for the board specific application.

Our prototype will look like this:

#ifndef __BOARD_H__

#define __BOARD_H__

/**

* brief Initialize all board dependant functionality

* return 0 on success, -1 otherwise

*/

int board_init(void);

#endif /* __BOARD_H__ */

Now we can create board.c in the src/ directory, and implement the

API. We will cut everything from the beginning of main until

watchdog_enable (inclusive) and paste it into our new function. Now we

need to clean up the body of this function to use our new APIs. We need

to include board watchdog.h and tlv.h as well as fix up any of the

function calls to reflect our refactoring effort.

#include "board.h"

#include "watchdog.h"

#include "tlv.h"

#include <msp430.h>

/**

* brief Initialize all board dependant functionality

* return 0 on success, -1 otherwise

*/

int board_init(void)

{

watchdog_disable();

if (tlv_verify() != 0) {

/* Calibration data is corrupted...hang */

while(1);

}

/* Configure the clock module - MCLK = 1MHz */

DCOCTL = 0;

BCSCTL1 = CALBC1_1MHZ;

DCOCTL = CALDCO_1MHZ;

/* Configure ACLK to to be sourced from VLO = ~12KHz */

BCSCTL3 |= LFXT1S_2;

/* Configure P1.0 as digital output */

P1SEL &= ~0x01;

P1DIR |= 0x01;

/* Set P1.0 output high */

P1OUT |= 0x01;

/* Configure P1.3 to digital input */

P1SEL &= ~0x08;

P1SEL2 &= ~0x08;

P1DIR &= ~0x08;

/* Pull-up required for rev 1.5 Launchpad */

P1REN |= 0x08;

P1OUT |= 0x08;

/* Set P1.3 interrupt to active-low edge */

P1IES |= 0x08;

/* Enable interrupt on P1.3 */

P1IE |= 0x08;

/* Global interrupt enable */

__enable_interrupt();

watchdog_enable();

return 0;

}

Finally, we need to clean up our main function to call board_init and then update watchdog_pet API.

int main(int argc, char *argv[])

{

(void) argc;

(void) argv;

if (board_init() == 0) {

/* Start blinking the LED */

while (1) {

watchdog_pet();

if (_blink_enable != 0) {

/* Wait for LED_DELAY_CYCLES cycles */

__delay_cycles(LED_DELAY_CYCLES);

/* Toggle P1.0 output */

P1OUT ^= 0x01;

}

}

}

return 0;

}

Now isn’t that much cleaner and easier to read? Is it perfect, no?

But is it better than before, definately. The idea behind refactoring in

embedded systems is to make the application level code as agnostic as

possible to the actual device it is running on. So if we were going to

take this code and run it on a Atmel or PIC, all we

should have

to change is the implementation of the hardware specific APIs.Obviously

in our main this is not the case yet. We have register access to GPIO

pins and an ISR, both of which are not portable code. We could create a

GPIO API and implement all GPIO accesses there, but for now there is no

need. Similarly, we could make an interrupt API which allows the caller

to attach an ISR function to any ISR, as well as enable or disable them.

This type of abstraction is called hardware abstraction and the code /

APIs that implement it are called the hardware abstraction layer (HAL).

Makefiles

Now that the code is nicely refactored, on to the basics of make. The

script invoked by make is called a makefile. To create a makefile, you

simply create a new text file which is named ‘makefile’ (or ‘Makefile’).

Before we begin writing the makefile, lets discuss the basic syntax.

For more information you can always reference the GNU make documentation

found

here.

The basic building block of makefiles are rules. Rules define how an

output is generated given a certain set of prerequisites. The output is

called a target and make automatically determines if the prerequisites

for a given target have been satisfied. If any of the prerequisites are

newer than the target, then the instructions – called a recipe – must be

executed. The syntax of a rule is as follows:

<target> : <prerequisites>

<recipe>

Note that in makefiles whitespace does matter. The recipe must be

tab-indented from the target line. Most editors will automatically take

care of this for you, but if your editor replaces tabs with spaces for

makefiles, make will reject the syntax and throw an error. There should

be only one target defined per rule, but any number of prerequisites.

For example, say we want to compile main.c to output main.o, the rule

might look like this:

main.o: main.c

<recipe>

If make is invoked and the target main.o is newer than main.c, no

action is required. Otherwise, the recipe shall be invoked. What if

main.c includes a header files called config.h, how should this rule

look then?

main.o: main.c main.h

<recipe>

It is important to include all the dependencies of the file as

prerequisites, otherwise make will not be able to do its job correctly.

If the header file is not included in the list of prerequisites, it can

cause the build not to function as expected, and then ‘mysteriously’

start functioning only once main.c is actually changed. This becomes

even more important when multiple source files reference the same

header. If only one of the objects is rebuilt as a result of a change in

the header, the executable may have mismatched data types, enumerations

etc… It is very important to have a robust build system because there

is nothing more frustrating than trying to debug by making tons of

changes that seem to have no effect only to find out that it was the

fault of your build system.

As you can imagine, in a project which has many source files and many

dependences, creating rules or each one manually would be tedious and

most certainly lead to errors. For this reason, the target and

prerequisites can be defined using patterns. A common example would be

to take our rule from above, and apply it to all C files.

%.o: %.c

<recipe>

This rule means that for each C file, create an object file (or file

ending in .o to be specific) of the equivalent name using the recipe.

Here we do not include the header file because it would be nonsensical

for all the headers to be included as prerequisites for every source

file. Instead, there is the concept of creating dependencies which we

look at later.

Makefiles have variables similar to any other programming or

scripting language. Variables in make are always interpreted as strings

and are case-sensitive. The simplest way to assign a variable is by

using the assignment operator ‘=’, for example

VARIABLE = value

Note, variables are usually defined using capital letters as it helps

differentiate from any command line functions, arguments or filenames.

Also notice how although the variable is a string, the value is not in

quotes. You do not have to put the value in quotes in makefiles so long

as there are no whitespaces. If there are you must use double quotes

otherwise the value will be interpreted incorrectly. To reference the

variable in the makefile, it must be preceded with a dollar sign ($) and

enclosed in brackets, for example

<target> : $(VARIABLE)

@echo $(VARIABLE)

Would print out the value assigned to the variable. Putting the ‘@’

sign in front of the echo command tells make not to print out the

command it is executing, only the output of the command. You may be

wondering how it is that the shell command ‘echo’ can be invoked

directly from make. Typically invoking a shell command requires using a

special syntax but make has some implicit rules for command line

utilities. ‘CC’ is another example of an implicit rule whose default

value is ‘cc’ which is gcc. However, this is the host gcc, not our

MSP430 cross compiler so this variable will have to be overloaded.

The value assigned to a variable need not be a constant string

either. One of the most powerful uses of variables is that they can be

interpreted as shell commands or makefile functions. These variables are

often called macros. By using the assignment operator, it tells make

that the variable should be expanded every time it is used. For example,

say we want to find all the C source files in the current directory and

add assign them to a variable.

SRCS=$(wildcard *.c)

Here ‘wildcard’ is a make function which searches the current

directory for anything that matches the pattern *.c. When we have

defined a macro like this where SRCS may be used in more than one place

in the makefile, it is probably ideal not to re-evaluate the expression

every time it is referenced. To do so, we must use another type of

assignment operator, the simply expanded assignment operator ‘:=’.

SRCS:=$(wildcard *.c)

For most assignments, it is recommended to use the simply expanded

variables unless you know that the macro should be expanded each time it

is referenced.

The last type of assignment operator is the conditional variable

assignment, denoted by ‘?=’. This means that the variable will only be

assigned a value if it is currently not defined. This can be useful when

a variable may be exported in the environment of the shell and the

makefile needs that variable but should not overwrite it if it defined.

This means that if you have exported a variable from the shell (as we

did in

lesson 2),

that variable is now in the environment and make will read the

environment and have access to those variables when executing the

makefile. One example where this would be used is to define the path to

the toolchain. I like to install all my toolchains to the /opt

directory, but some people like to install them to the /home directory.

To account for this, I can assign the variable as follows:

TOOLCHAIN_ROOT?=~/msp430-toolchain

That makes all the people who like the toolchain in their home

directory happy. But what about me with my toolchain under /opt? I

simply add an environment variable to my system (

for help – see section 4)

which is equivalent to a persistent version of the export command.

Whenever I compile, make will see that TOOLCHAIN_ROOT is defined in my

environment and used it as is.

Rules can be invoked automatically by specifying macros that

substitute the prerequisites for the target. One of the most common

examples of this is using a macro to invoke the compile rule. To do

this, we can use a substitution command which will convert all .c files

in SRCS to .o files, and store them in a new variable OBJS.

OBJS:=$(SRCS:.c=.o)

This is a shorthand for make’s pattern substitution (patsubst)

command. If there is a rule defined that matches this substitution, make

will invoke it automatically. The recipe is invoked once for each file,

so for every source file the compile recipe will be invoked and object

file will be generated with the extension .o. Pattern substitution, as

well as the many other string substitution functions in make, can also

be used to strip paths, add prefixed or suffixes, filter, sort and more.

They may or may not invoke rules depending on the content of your

makefile.

Rules and variables are the foundations of makefiles. There is much

more but this short introduction is enough to get us started. As we

write our makefile, you will be introduced to a few new concepts.

Writing our Makefile

We made a whole bunch of changes to our code and now compiling from

the command line using gcc directly is not really feasible. We need to

write our first makefile using these principles from earlier. If you

have not yet downloaded the tagged code for this tutorial, now would be

the time. We are going to go through the new makefile line-by-line to

understand exactly how to write one. The makefile is typically placed in

the project root directory, so open it up with a text editor. The

first line starts with a hash (#), which is the symbol used to denote

comments in makefiles. Next we start defining the variables, starting

with TOOLCHAIN_ROOT.

TOOLCHAIN_ROOT?=/opt/msp430-toolchain

Using the conditional variable assignment, it is assigned the

directory of the toolchain. It is best not to end paths with a slash ‘/’

even it is a directory, because when you go to use the variable, you

will put another slash and end up with double slashes everywhere. It

won’t break anything usually, but its just cosmetic. Next we want to

create a variable for the compiler. The variable CC is implicit in make

and defaults to the host compiler. Since we need the MSP430

cross-compiler, the variable can be reassigned to the executable.

CC:=$(TOOLCHAIN_ROOT)/bin/msp430-gcc

Often the other executable inside the toolchain’s bin directory are

defined by the makefile as well if they are required. For example, if we

were to use the standalone linker ld, we would create a new variable LD

and point it to the linker executable. The list of implicit variables

can be found in the GNU make documentation.

Next the directories are defined.

BUILD_DIR=build

OBJ_DIR=$(BUILD_DIR)/obj

BIN_DIR=$(BUILD_DIR)/bin

SRC_DIR=src

INC_DIR=include

We have already create two directories, src and include, so SRC_DIR

and INC_DIR point to those respectively. The build directory is where

all the object files will go and will be created by the build itself.

There will be two subdirectories, obj and bin. The obj directory is

where the individually compiled object files will go, while he bin

directory is for the final executable output. Once the directories are

defined, the following commands are executed:

ifneq ($(BUILD_DIR),)

$(shell [ -d $(BUILD_DIR) ] || mkdir -p $(BUILD_DIR))

$(shell [ -d $(OBJ_DIR) ] || mkdir -p $(OBJ_DIR))

$(shell [ -d $(BIN_DIR) ] || mkdir -p $(BIN_DIR))

endif

The ifneq directive is similar to C, but since everything is a

string, it compares BUILD_DIR to nothing which is the equivalent to an

empty string. Then shell commands are executed to check if the directory

exists and if not it will be created. The square brackets are the shell

equivalent of a conditional ‘if’ statement and ‘-d’ checks for a

directory with the name of the string following. Similar to C, or’ing

conditions is represented by ‘||’. If the directory directory exists,

the statement is true, so rest will not be executed. Otherwise, the

mkdir command will be invoked and the directory will be created. The

shell command is repeated for each subdirectory of build.

Next the source files are saved to the SRCS variable.

SRCS:=$(wildcard $(SRC_DIR)/*.c)

Using the wildcard functions, make will search all of SRC_DIR for any

files that match the pattern *.c, which will resolve all of our C

source files. Next comes the object files. As we discussed earlier, path

substitution can be used to invoke a rule. The assignment

OBJS:=$(patsubst %.c,$(OBJ_DIR)/%.o,$(notdir $(SRCS)))

is the long hand version of what we discussed above but with some

differences. First, the patsubst command is written explicitly. Then the

object file name must be prepended with the OBJ_DIR path. This tells

make that for a given source file, the respective object file should be

generated under build/obj. We must strip the path of the source files

using the notdir function. Therefore, src/main.c would become main.c. We

need to do this because we do not want prepend the OBJ_DIR to the full

source file path, i.e. build/src/main.c. Some build systems do this, and

it is fine, but I prefer to have all the object files in one directory.

One caveat of putting all the object files in one directory is that if

two files have the same name, the object file will get overwritten by

the last file to compile. This is not such a bad thing however, because

it would be confusing to have two files with the same name in one

project. The rule that this substitution invokes is defined later in the

makefile.

Next the output file, ELF is assigned.

ELF:=$(BIN_DIR)/app.out

This is just a simple way of defining the name of location of the

final executable output file. We place it in the bin directory (although

it’s technically not a binary). This file is the linked output of all

the individual object files that exist in build/obj. To understand how

this works we need to look at the next two variables, CFLAGS and

LDFLAGS. These two variables are common practice and represent the

compile flags and linker flags respectively. Lets take a look at the

compiler flags.

CFLAGS:= -mmcu=msp430g2553 -c -Wall -Werror -Wextra -Wshadow -std=gnu90 -Wpedantic -MMD -I$(INC_DIR)

The first flag in here is one we have been using all along to tell

the compiler which device we are compiling for. The ‘-c’ tells gcc to

stop at the compilation step and therefore the linker will not be

invoked. The output will still be an object file containing the machine

code, but the addresses to external symbols (symbols defined in other

objects files) will not yet be resolved. Therefore you cannot load and

execute this object file, as it is only a part of the executable. The

-Wall -Werror -Wextra -Wshadow -std=gnu90 -Wpedantic compiler flags tell

the compiler to enable certain warnings and errors to help make the

code robust. Enabling all these flags makes the compiler very sensitive

to ‘lazy’ coding. Wall for example turns all the standard compiler

warnings, while Wextra turns on some more strict ones. You can find out

more about the exact checkers that are being enabled by looking at the

gcc man page. Werror turns all warnings into errors. For non-syntactical

errors, the compiler may complain using warnings rather than errors,

which means the output will still be generated but with potential

issues. Often leaving these warnings uncorrected can result in undesired

behaviour and are difficult to track down because the warnings are only

issued when that specific file is compiled. Once the file is compiled,

gcc will no longer complain and its easy to forget. By forcing all

warnings to be errors, you must fix everything up front.

In C, there is nothing stopping a source file from containing a

global variable foo, and then using the same name foo, for an argument

passed into one of the functions. In the function, the compiler must

decide which foo to use, which is not right. The compiler cannot

possibly know which variable you are referring to, so enabling Wshadow

will throw an error if shadow variables are encountered, rather than

choosing one.

Finally, -std and -Wpedantic tell the compiler what standard to use,

and what types of extensions are acceptable. The gnu90 standard is the

GNU version of the ISO C90 standard with GNU extensions and implicit

functions enabled. I would have preferred to use C90 (no GNU extensions –

also called ansi) but the msp430.h header and intrinsic functions do

not play nice with this. Wpedantic tells the compiler to accept only

strict ISO C conformance and not accept non-standard extensions other

than those that are defined with prepended and appended with double

underscores (think __attributes__). So together these two parameters

mean no C++ style comment (“//”), variables must be defined at the

beginning of the scope (i.e. right after an opening brace) amongst other

things.

The -MMD flag tells the compiler to output make-compatible dependency

data. Instead of writing the required header files explicitly as we did

earlier, gcc can automatically determine the prerequisites and store

them in a dependency file. When we compile the code, make will check not

only the status of the file, but also of all the prerequisites stored

in its respective dependency file. If you look at a dependency file

(which have the extension .d as we will see later), it is really just a

list of the header files included in the source file. Finally, the -I

argument tells gcc in what directory(ies) to search for include files.

In our case this is the variable INC_DIR which resolves to the the

include/ directory.

Under the linker flags variable LDFLAGs, we only have to pass the

device type argument. The default linker arguments are sufficient at

this time.

LDFLAGS:= -mmcu=msp430g2553

Next there is the DEPS variable, which stands for dependencies.

DEPS:=$(OBJS:.o=.d)

As mentioned earlier, the dependency rule takes the object file and

creates a matching dependency file under the build/obj directory. This

macro is the same as the shorthand version of patsubst which we saw

earlier for OBJS. The rule to generate the dependency file is implicit.

Finally the rules. Rules typically result in the output of a file

(the target), however sometimes we need rules to do other things. These

targets are called PHONY, and should be declared as such. The target

all is an example of a PHONY.

.PHONY: all

all: $(ELF)

We don’t want a file named ‘all’ to be generated, but it is still a

target which should be executed. The target all means perform the full

build. The prerequisite of the target all is the the target ELF, which

is the output file. This means in order for ‘make all’ to succeed, the

output binary must have been generated successfully and be up to date.

The ELF target has its own rule below:

$(ELF) : $(OBJS)

$(CC) $(LDFLAGS) $^ -o $@

It’s prerequisites are all the object files that have been created

and stored in the variable OBJS. Now the recipe for this rule brings us

back to the linker issue. The compiled object files must be linked into

the final executable ELF so that all addresses are resolved. To do this

we can use gcc (CC) which will automatically invoke the linker with the

correct default arguments. All we have to do is pass the LDFLAGS to CC,

and tell it what the input files are and what the output should be. The

recipe for the link command introduced a new concept called automatic

variables. Automatic variables can be used to represent the components

of a rule. $@ refers to the target, while $^ refers to all the

prerequisites. It is convenient way to write generic rules without

explicitly listing the target and prerequisites. The equivalent for this

recipe without using the automatic variables would be

$(ELF) : $(OBJS)

$(CC) $(LDFLAGS) $(OBJS) -o $(ELF)

In order to meet the prerequisites of OBJS for the ELF target, the

individual sources must be compiled. This is where the path substitution

comes in. When make tries to resolve the prerequisite, it will see the

path substitution in the assignment of the OBJ variable and invoke the

final rule:

$(OBJ_DIR)/%.o : $(SRC_DIR)/%.c

$(CC) $(CFLAGS) $< -o $@

This rule takes all the source files stored in the SRCS variable and

compiles them with the CFLAGS arguments. In this case, the rule is

invoked for each file, so the target is each object file and the

prerequisite is the matching source file. This leads us to another

automatic variable $< which is the equivalent to taking the first

prerequisite, rather than all of them as $^ does. The rule must match

our path substitution, so thats why the target must be prepended with

the OBJ_DIR variable and the prerequisites with the SRC_DIR variable.

The last rule is the clean rule, which is another PHONY target. This

rule simply deletes the entire build directory, so there are no objects

or dependencies stored. If you ever want to do a full rebuild, you would

perform a make clean and then a make all, or in shorthand on the

command line:

make clean && make all

The last line in the makefiles is the include directive for the

dependency files. In make the include directive can be used to include

other files as we do in C. The preceding dash before include tells make

not to throw an error if the files do not exist. This would be the case

in a clean build, since the dependencies have yet to be generated. Once

they are, make will use them to determine what to rebuild. Open up one

of the dependency files to see what it contains. – take main.d for

example:

build/obj/main.o: src/main.c include/board.h include/watchdog.h

This is really just another rule the compiler has generated stating

that main.o has the prerequisites main.c, board.h, and watchdog.h. The

rule will automatically be invoked by make when main.o is to be

generated. System header files (ie libc) are not included. The include

directive must be placed at the end of the file so as not to supersede

the default target – all. If you place the include before the target

all, the first rule invoked with be the dependencies, and you will start

to see weird behaviour when invoking make without explicit targets as

arguments. By playing including the dependency rules at the end,

invoking ‘make’ and ‘make all’ from the command line are now synonymous.

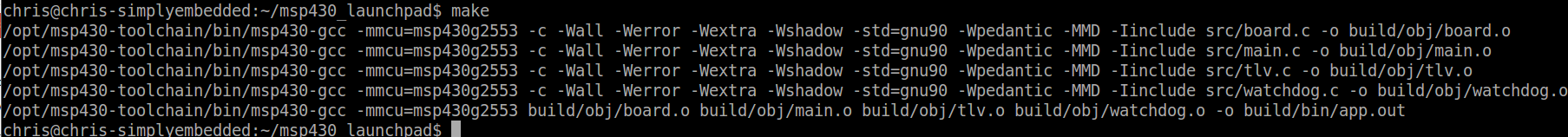

When we execute make from the command line, this is what the output

should look like.

From the output you can see exactly what we have discussed. Each

source file is compiled using the arguments defined by CFLAGS into an

object file and stored under build/obj. Then, all these object files are

linked together to create the final executable app.out. This is the

file that is loaded to the MSP430. The functionality is exactly the same

as the previous lesson. Some homework for those of you who are

interested: create a new rule in the makefile called ‘download’ which

will flash the output to the MSP430 automatically using mspdebug. The

answer will be available in the next lesson.

In the current code base the main application performs a very

simple task, it blinks an LED continuously until a user presses a button

and then it stops. The blinking of the LED is implemented by a simple

while loop and a delay. While in this loop, no other code can be

executing, only the toggling of the LED. This is not a practical

solution to performing a periodic task, which is a basic and common

requirement of an embedded system. What if we also wanted to take a

temperature measurement every 5 seconds. Trying to implement both of

these using loops and delays would be complicated and most likely

inaccurate. To address this issue, we will leverage what we learned

about interrupts and implement a timer. Timers are a fundamental concept

in embedded systems and they have many use cases such as executing a

periodic task, implementing a PWM output or capturing the elapsed time

between two events to name a few. Depending on the architecture, some

timers may have specific purposes. For example, on ARM cores, there is a

systick timer which is used to provide the tick for an operating

system. On most ARM and Power Architecture cores, there is a PIT –

periodic interval timer, which can be used for any type of periodic

task. There are also timers used as a time base, i.e. to keep track of

time for the system clock. At hardware level however, they pretty much

operate using the same principle. The timer module has a clock input,

which is often configurable (internal / external, clock divider etc..).

On each clock pulse, the timer either increments or decrements the

counter. When the counter reaches some defined value, an interrupt

occurs. Once the interrupt is serviced, the timer may restart counting

if it is a periodic timer, or it may stop until reconfigured. For timers

used as a time base, the interrupt may not be required and the timer

shall tick indefinitely and may be queried by software when required. To

save resources, the MSP430 has combined most of this functionality into

two timer modules – Timer_A and Timer_B. They share most of the same

functionality, but there are some differences, notably that timer B can

be configured to be an 8, 10, 12, or 16-bit timer while Timer_A is only a

16-bit timer. The other differences between Timer_A and Timer_B can be

found in section 13.1.1 of the family reference manual. In this lesson,

we will be using Timer_A to implement a generic timer module which can

be used by the application to invoke periodic or one-shot timers. Then

we will modify our application to replace the current implementation of

the blinking LED to use timers so that in between blinking the LED the

CPU can perform other tasks.

Timer_A theory

Before writing any code we must understand how Timer_A works and what

registers are available to configure and control this peripheral.

Timer_A is a 16-bit timer, which means it can increment to 0xFFFF (65536

cycles) before it rolls over. Both timers on the MSP430 have both

capture and compare functionality. In fact, there are 3 timer blocks in

Timer_A which can be independently configured to either mode. Capture

functionality is used to time events, for example, the time between LED

last toggled and the switch is pressed. In this scenario, the timer runs

until one of these two events happen at which point the current value

of the timer is stored in a capture register and an interrupt is

generated. Software can then query the saved value and store it until

the next interrupt is generated. The time difference between the two

events can then be calculated in terms of ticks. A tick at the hardware

level is one clock cycle, or the time between timer a increment or

decrement. So if the timer was clocked at 1MHz, each tick would be 1us.

The other mode which these timers support is compare mode, which is the

standard use for a timer and the one we will be using in this lesson.

Its is called compare mode because the timer value is compared against

the interval assigned by software. When they match, the time has expired

and an interrupt is generated. If the timer is configured as a periodic

timer, it will restart the cycle again. The timer module has 3 modes

which must be configured correctly for the specific application

- Up mode: timer will start with a value of zero and increment until a software defined value

- Continuous mode: timer will start at zero and increment until it rolls over at 0xFFFF

- Up/Down mode: timer will start at zero, increment until a defined value, and the start decrementing back to zero

In our case, we will be be using

the timer in up mode because we want to define an interval which is a

minimum timer resolution for our application. Now lets take a look at

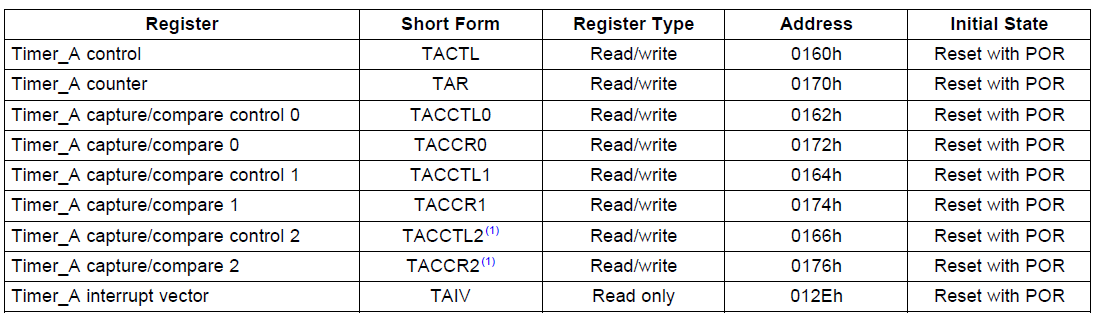

how to configure Timer_A. The following table from the reference manual

defines the registers associated with the module.

TI MSP430x2xx Family Reference Manual (SLAU144J)

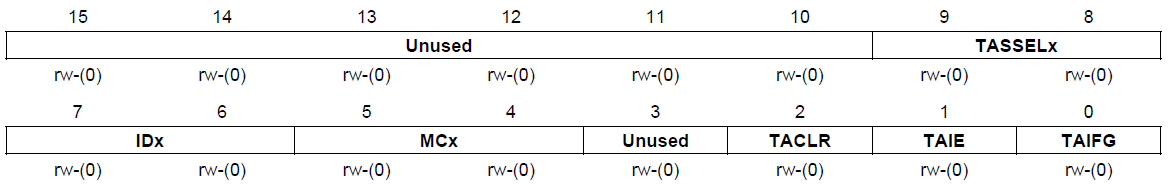

Timer_A control (TACTL) is the general timer control register. It is

used to set up the timer clock source, divider clock mode and

interrupts. The register definition is as follows:

TI MSP430x2xx Family Reference Manual (SLAU144J)

- TASSELx: timer clock source select

- 00 TACLK (external Timer_A clock input)

- 01 ACLK

- 10 SMCLK

- 11 INCLK (device specific)

- IDx: input clock divider

- MCx: timer module mode control

- 00 Off (timer is halted)

- 01 Up mode

- 10 Continuous mode

- 11 Up/down mode

- TACLR: Timer_A clear

- 0 No action

- 1 Clear the current timer value as well as the divider and mode

- TAIE: Timer_A interrupt enable

- 0 interrupt disabled

- 1 interrupt enabled

- TAIFG: Timer_A interrupt flag

- 0 No interrupt pending

- 1 Timer interrupt pending

It is important to note that whenever modifying timer registers, it

is recommended to halt the timer first using the TACLR bit, and then

reset the register with the required parameters. This ensures that the

timer does not expire unexpectedly and cause an interrupt or some other

unintended consequence.

The Timer_A counter register (TAR) is the 16-bit register which

contains the current value of the timer. Usually software would not have

to read or write to this register unless it is being used as a time

base. In most cases an interrupt would indicate when the timer expires,

and since software must set the interval, the value of this register at

that time would be known.

Timer_A capture/compare register x (TACCRx) and Timer_A

capture/compare control register x (TACCTLx) are three pairs of the same

registers. Remember earlier we saw that the Timer_A module has three

capture / compare blocks that can be independently configured? These are

the registers to do so. Software can utilize one, two or all three

blocks simultaneously to perform different functions using a single

timer. This make efficient use of the microcontroller’s resources since

there is only one clock source and divider for all three. But each block

can be configured to have a different timeout if in compare mode, or

can be configure to capture mode. TACCRx is a 16-bit register which has

two functions:

- Compare mode: the value set by software in this register will

determine the interval at which the timer will expire when in up mode,

or which the timer will start decrementing in up/down mode. If the timer

is in continuous mode, this register has no effect on the interval. The

value in this register is compared against that in TAR.

- Capture mode: this register will hold the value when the capture

event occurs. The value from TAR is copied to this register to be read

by software.

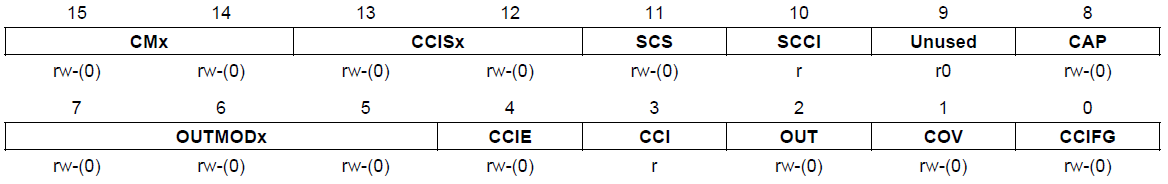

TACCTLx is the control register for each of the block and contains the following fields:

TI MSP430x2xx Family Reference Manual (SLAU144J)

- CMx: capture mode – only valid when block is configured as a capture timer

- 00 No capture

- 01 Capture on rising edge of the timer clock

- 10 Capture on the falling edge of the timer clock

- 11 Capture on both edges of the timer clock

- CCISx: capture input selection – ie what input triggers the capture event

- 00 CCIxA (device specific)

- 01 CCIxB (device specific)

- 10 GND (ground)

- 11 Vcc

- SCS: synchronize capture input signal with the timer clock

- 0 Do not synchronize (asynchronous capture)

- 1 Synchronize the input with the timer (synchronous capture)

- SCCI: synchronized capture/compare input

- The latched value of the input at time of capture

- CAP: capture/compare mode selection

- 0 Compare mode

- 1 Capture mode

- OUTMODx: Timer_A can perform actions to specific output pins

automatically in hardware (no ISR required). This field sets the desired

action

- 000 OUT bit value (see below)

- 001 Set

- 010 Toggle/reset

- 011 Set/reset

- 100 Toggle

- 101 Reset

- 110 Toggle/set

- 111 Reset/set

- CCIE: capture/compare interrupt enable

- 0 Interrupt disabled

- 1 Interrupt enabled

- CCI: value of input signal of capture/compare module

- OUT: output value for OUTMODx = 000

- 0 Output is low

- 1 Output is high

- COV: capture overflow – timer overflowed before capture event occurs

- 0 No capture overflow

- 1 Capture overflow occured

- CCIFG: capture/compare interrupt flag

- 0 No pending interrupt

- 1 Interrupt is pending

A few notes on this register. First is the concept of timer inputs

and outputs. Each capture / compare block can select an input or output

depending on the mode. In capture mode, an input is configured to

trigger the capture. In compare mode, an output can be selected to

toggle, clear, set an output pin etc.. upon timer expiry. The input is

selected using the CCISx register. The pins for input/output must be

configured correctly as indicated in the pin muxing table in the

datasheet (remember

lesson 4).

Up to two inputs can be configured for the capture blocks – one at a

time. The output pin is selected only through the pin muxing. The

OUTMODx field is used to determine what action to take on the output

pin. There are more details on what each of them mean in table 12-2 of

the family reference manual. In this tutorial we will not be using these

features, this is just a quick overview so that if you do need to use

them you know where to start. The second point to discuss is that of

interrupts. Each of the capture/compare blocks have their own separate

interrupt enable and interrupt pending fields, in addition to the

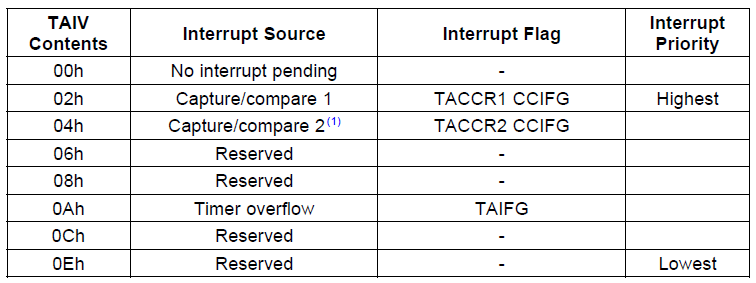

generic one for Timer_A in TACTL. There is a caveat however. Each of

these blocks do not source their own interrupt vector. In fact, there is

only two interrupt vectors for the whole module. The first is for

TACCR0 which has the higher priority of the two. It also has the lowest

interrupt latency, and requires the least processing in the ISR.

Therefore this interrupt would be used in applications where accuracy of

the timer is more important. This interrupt fires exclusively with

TACCR0[CCIE] and cleared using TACCR0[CCIFG]. The rest of the interrupts

all source the same IRQ called TAIV. It is not uncommon to package many

interrupt sources into one IRQ and then provide a register which

summarizes the all the flags. In the ISR, the software would read this

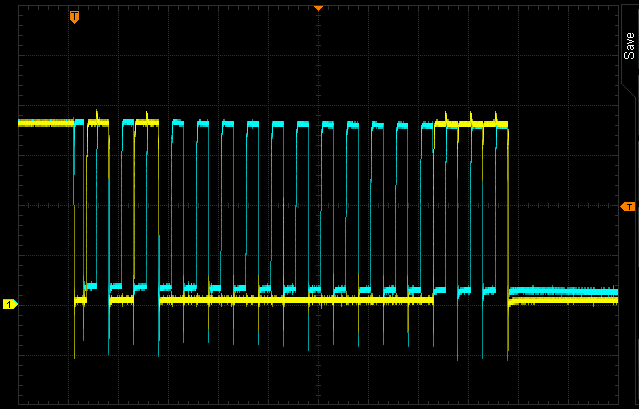

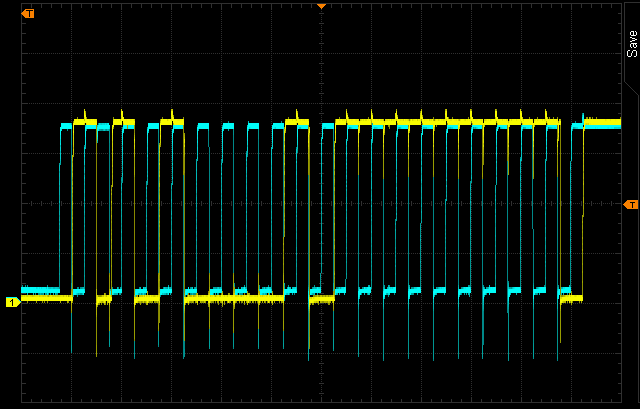

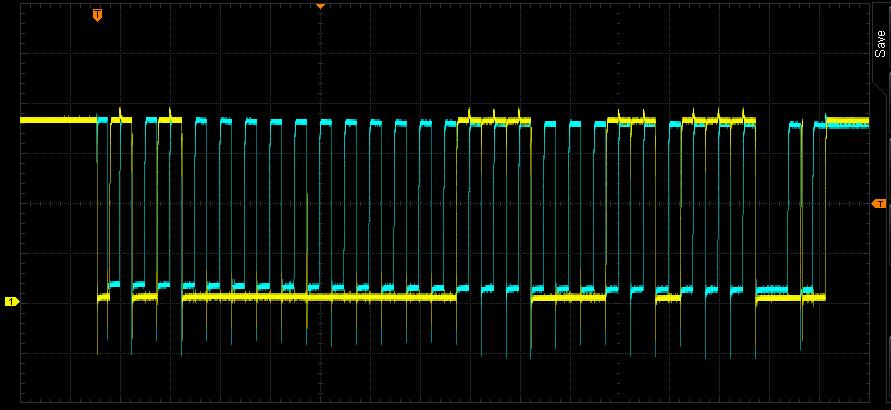

register to determine the source, and respond accordingly. The TAIV